This blog was written in collaboration with Yuqing Gao, Jian Tan, Fan Bu, Ali Dabir, Hamid Amini, Doosan Jung, Yury Sokolov, Lei Jin, and Derek Engi.

LLMs can sound very convincing, but in network operations, sounding right isn’t enough.

Network operations are dominated by structured telemetry, long configuration states, time series at scale, and investigations that sprawl across devices, sites, and domains. The practical constraint is not whether an AI model can answer a networking question in isolation. It’s whether the AI system can reason over real operational data, understand the context of your network and business, preserve the details that change outcomes, and remain reliable across multi-turn interactions, including troubleshooting.

That establishes a clear requirement for technical and business decision makers: if you want AI to support network operations, it must be engineered for networking data and networking workflows, not adapted after the fact.

The Cisco Deep Network Model is fine-tuned and trained for that reality. It’s a networking-specialized model designed to reason like an experienced operator. In deployment, it can be paired with Analytics Context Engineering (ACE) and Lightweight Autonomous Program Synthesis and Execution (LAPSE), two model-agnostic innovations that scale context and machine-data handling. Together, they support operator-grade reasoning at enterprise scale, delivering faster responses grounded in evidence, with context preserved across turns so investigations don’t degrade into truncation, looping, or guesswork.

After reading this post, you’ll come away knowing (1) what the Cisco Deep Network Model is, (2) why general-purpose models struggle in network operations, and (3) the two breakthroughs that make it practical at scale: ACE and LAPSE.

Off-the-shelf LLMs don’t hold up in networking workflows

General-purpose models are strong at summarization, conversation, and broad knowledge retrieval. Network operations stress a different set of constraints.

The data doesn’t fit. Even routine investigations involve long time-series windows, lots of counters, packet loss and latency across regions, large config sections, and logs from many devices. Off-the-shelf models hit context limits fast, then start dropping information or relying on shortcuts.

Mixed data gets mangled. Networking work isn’t just text. It’s telemetry, JSON, syslog, CLI output, config snippets, and ticket context together. Even with large context windows, many frontier models are optimized for human language, not machine data, so they can lose track of the exact timestamp, interface, policy, or metric change that makes the root cause obvious.

The Cisco Deep Network Model starts with a different assumption: don’t force the model to read everything. Instead, build a system that can handle machine data at scale, preserve investigative context without bloat, and move through troubleshooting like an expert would.

So, what’s the Cisco Deep Network Model?

The Cisco Deep Network Model is a purpose-built model for networking, designed to support troubleshooting, configuration, and automation with higher precision than general-purpose models. The intent is not to create a better chatbot. The intent is to create a model that behaves like a seasoned network operator: grounded in evidence, disciplined in troubleshooting, and able to converge on root cause and remediation with clear traceability.

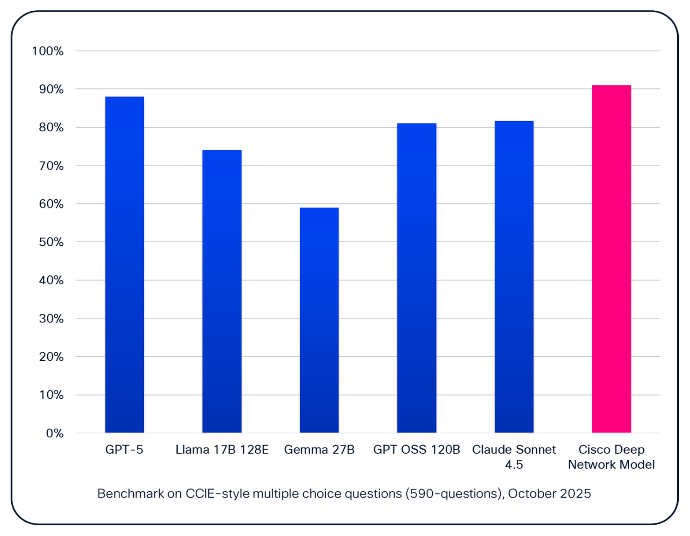

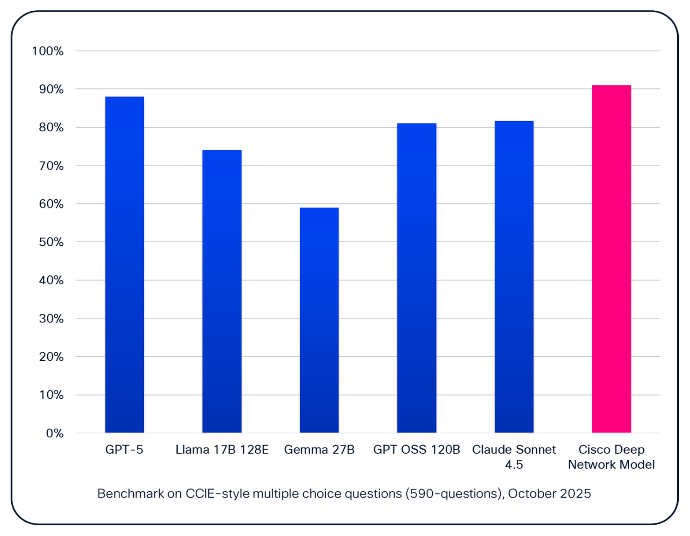

Benchmark results for the Cisco Deep Network Model reflect this specialization. On a CCIE-style multiple-choice benchmark, Cisco’s model outperforms general-purpose models by up to 20 percent.

At first glance, some of these differences may appear incremental. In practice, they are not. Once a model surpasses roughly 85 percent, the remaining errors tend to concentrate in rare, complex edge cases rather than common patterns. Improving performance at that level requires addressing the long tail of networking scenarios that general-purpose models often miss.

An analogy is useful here: each additional point beyond that threshold is comparable to an elite athlete shaving fractions of a second off a world record. The effort increases sharply because the work shifts from broad capability improvements to resolving the hardest, least common cases. This is where domain-specific training, expert vetting, and operational grounding make a meaningful difference.
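One way to see why gains at a high baseline are not incremental is to look at the share of remaining errors that a gain removes. The short sketch below uses hypothetical scores chosen only to illustrate the arithmetic; they are not benchmark results from this post, and `relative_error_reduction` is a helper name introduced here.

```python
from fractions import Fraction


def relative_error_reduction(acc_before_pct: int, acc_after_pct: int) -> Fraction:
    """Share of the remaining errors removed when accuracy rises."""
    errors_before = 100 - acc_before_pct
    errors_after = 100 - acc_after_pct
    return Fraction(errors_before - errors_after, errors_before)


# Hypothetical scores, used only to illustrate the arithmetic.
low = relative_error_reduction(50, 55)   # 5 points gained at a low baseline
high = relative_error_reduction(85, 90)  # 5 points gained at a high baseline

print(f"50 -> 55: {float(low):.0%} of remaining errors removed")
print(f"85 -> 90: {float(high):.0%} of remaining errors removed")
```

The same five-point gain removes a tenth of the remaining errors at a 50 percent baseline, but a third of them at 85 percent, and those remaining errors are the hard ones.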

Trusted training and continuous learning

The model is built on a foundation of Cisco U courseware and CCIE-level knowledge representing more than 40 years of operational insight. The model has been trained on nearly 100 million tokens, and Cisco experts have contributed thousands of reasoning traces, meticulously annotating and validating each layer of logic so the model learns not just the answer, but the operator-grade path to get there.

Networks also evolve continuously, and the Cisco Deep Network Model is designed to evolve with them. Through reinforcement learning, it adapts using new data and private, real-world Technical Assistance Center (TAC) and Customer Experience (CX) insights available only within Cisco, so the model improves as operational patterns, software, and environments change.

Optimizing LLM performance for machine data: ACE and LAPSE

The Cisco Deep Network Model is more than a trained model. It’s delivered as a system that combines domain reasoning with context management and machine-data execution, built to overcome the two constraints that break most deployments: (1) context scale and (2) machine data scale.

Analytics Context Engineering (ACE)

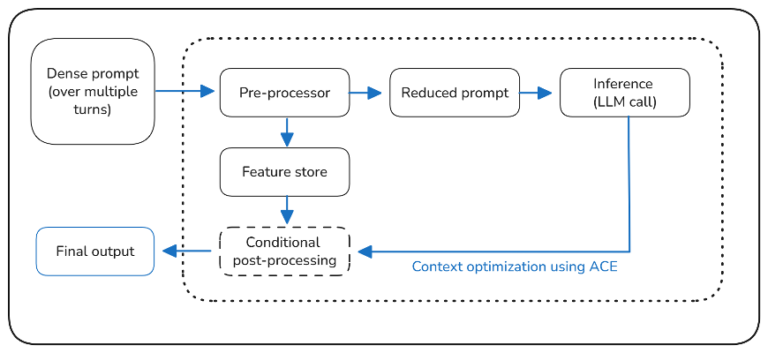

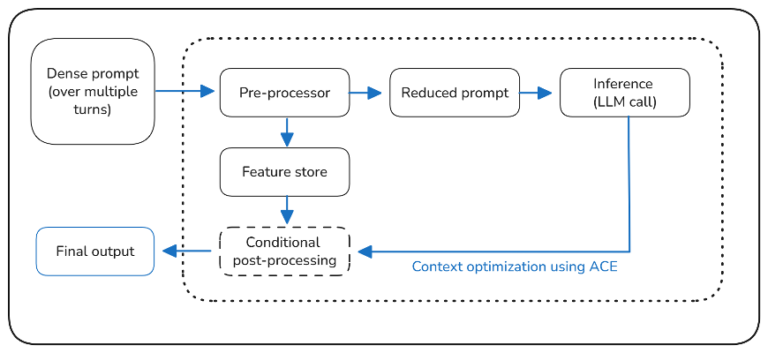

ACE transforms a dense prompt into compact canonical views and reconstructs it using the fewest possible tokens. The goal is not summarization that discards detail. The goal is to reduce the number of tokens the LLM has to process without losing what matters, so it can maintain context across data-heavy, multi-turn investigations and keep the working prompt within the model’s context window. Practically, this means normalizing mixed inputs such as telemetry summaries, log excerpts, config deltas, and ticket notes into a consistent investigation record that stays usable over time.

This matters because investigations naturally snowball. Every turn adds repeated history, partial artifacts, mixed-format evidence, and competing hypotheses. Over time, even a correct model can become less reliable because the input becomes less usable. ACE is designed to keep the investigation compact, stable, and faithful to the underlying evidence.

Cisco reports that ACE can reduce prompt size by roughly 20 to 90 percent while preserving the information the model needs to stay accurate. Off-the-shelf approaches typically manage only about 0 to 30 percent reduction before critical details start to drop. In practical terms, this is what keeps multi-turn work consistent rather than fragile.
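To make the idea of a compact investigation record concrete, here is a minimal sketch, assuming that much of the bloat in a multi-turn prompt comes from the same evidence being repeated in slightly different formats. It is not Cisco’s ACE algorithm, which this post does not describe in detail. The `Evidence` shape, the `canonicalize` and `compact` helpers, the sample data, and the whitespace-based token count are all assumptions made for illustration.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    """One canonical fact: where it came from, what it is about, what it says."""
    source: str   # e.g. "syslog", "telemetry", "config"
    subject: str  # e.g. a device:interface identifier
    fact: str


def canonicalize(raw: dict) -> Evidence:
    """Map a mixed-format item onto the canonical shape (case and whitespace normalized)."""
    return Evidence(
        source=raw["source"].strip().lower(),
        subject=raw["subject"].strip(),
        fact=" ".join(raw["fact"].split()),
    )


def compact(turns: list[list[dict]]) -> list[Evidence]:
    """Merge evidence from all turns, keeping each distinct fact once in first-seen order."""
    seen: dict[Evidence, None] = {}
    for turn in turns:
        for raw in turn:
            seen.setdefault(canonicalize(raw), None)
    return list(seen)


def token_count(text: str) -> int:
    """Crude whitespace token count; a real tokenizer would give different numbers."""
    return len(text.split())


# Hypothetical investigation: turn 2 repeats turn 1's history and adds one new fact.
turns = [
    [
        {"source": "Syslog", "subject": "edge-1:Gi0/1", "fact": "link  flapped at 10:02:13Z"},
        {"source": "telemetry", "subject": "edge-1:Gi0/1", "fact": "input errors rising"},
    ],
    [
        {"source": "syslog", "subject": "edge-1:Gi0/1", "fact": "link flapped at 10:02:13Z"},
        {"source": "telemetry", "subject": "edge-1:Gi0/1", "fact": "input errors rising"},
        {"source": "config", "subject": "edge-1:Gi0/1", "fact": "mtu changed 1500 -> 9000"},
    ],
]

record = compact(turns)
naive_prompt = "\n".join(json.dumps(item) for turn in turns for item in turn)
compact_prompt = "\n".join(f"{e.source}|{e.subject}|{e.fact}" for e in record)

print(len(record), "distinct facts")
print(token_count(naive_prompt), "tokens before,", token_count(compact_prompt), "tokens after")
```

Every distinct fact survives, including the exact timestamp and interface, so the reduction comes from removing repetition and format overhead and not from summarizing detail away.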

Want the technical details behind Analytics Context Engineering? This blog goes deeper.

Lightweight Autonomous Program Synthesis and Execution (LAPSE)

LAPSE takes a different approach to scale. When the input is large machine data, the system performs on-demand tool creation and execution to transform data from a source schema into a target schema optimized for the task. The model receives task-ready outputs rather than raw telemetry dumps, which keeps the workflow fast and reduces the risk of missing critical signals.

This is a pragmatic design choice. Time series and high-volume telemetry are better handled by tools that aggregate, filter, reshape, and compute. The model should guide what needs to be computed and how to interpret it, not act as the compute engine itself.
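As an illustration of the kind of small tool such a system might create on demand, the sketch below reshapes per-sample latency telemetry (a source schema) into one row per interface (a target schema). It is hand-written for this example and is not output from LAPSE; the field names, the `summarize_latency` function, and the sample data are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def summarize_latency(samples: list[dict], threshold_ms: float) -> list[dict]:
    """Reshape raw samples into a per-interface summary.

    Source schema: one row per sample {"ts", "device", "interface", "latency_ms"}.
    Target schema: one row per (device, interface) with sample count, mean, max,
    and the timestamp of the first sample above threshold_ms (None if never exceeded).
    """
    groups = defaultdict(list)
    for sample in samples:
        groups[(sample["device"], sample["interface"])].append(sample)

    summary = []
    for (device, interface), rows in sorted(groups.items()):
        # ISO 8601 timestamps in one format sort correctly as plain strings.
        rows.sort(key=lambda r: r["ts"])
        latencies = [r["latency_ms"] for r in rows]
        first_breach = next(
            (r["ts"] for r in rows if r["latency_ms"] > threshold_ms), None
        )
        summary.append({
            "device": device,
            "interface": interface,
            "samples": len(rows),
            "mean_ms": round(mean(latencies), 1),
            "max_ms": max(latencies),
            "first_breach_ts": first_breach,
        })
    return summary


# Hypothetical telemetry; a real window would hold far more samples.
samples = [
    {"ts": "2024-01-01T10:00:00Z", "device": "edge-1", "interface": "Gi0/1", "latency_ms": 12.0},
    {"ts": "2024-01-01T10:01:00Z", "device": "edge-1", "interface": "Gi0/1", "latency_ms": 95.0},
    {"ts": "2024-01-01T10:00:00Z", "device": "edge-2", "interface": "Gi0/2", "latency_ms": 11.0},
]

summary = summarize_latency(samples, threshold_ms=50.0)
for row in summary:
    print(row)
```

The model then reasons over a handful of summary rows, with the exact breach timestamp preserved, instead of reading every sample.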

LAPSE enables the model to handle virtually unlimited machine data by accelerating machine data processing for interactive operational tasks, turning raw telemetry into structured, task-ready outputs. Reported comparisons show roughly 3–5 seconds of latency (vs. 27–200 seconds for off-the-shelf solutions) for tasks such as machine-data schema transformation. Reported transformation accuracy is near 100% (vs. 0–70%).

The point for decision makers is simple. This is the difference between an AI system that can keep up with an operator and one that turns every investigation into a waiting game.

How it works in practice

ACE and LAPSE are complementary by design.

- LAPSE handles the heavy lift of machine data transformation quickly and deterministically.

- ACE keeps the investigation state compact, stable, and usable across multi-turn work.

Together, they enable a workflow that’s difficult for generic systems to sustain: (1) start with intent, (2) pull the minimal relevant evidence, (3) maintain a consistent record of what’s known, and (4) produce outputs that are fast enough and grounded enough to trust in production.

The model also supports a “next best action” troubleshooting loop so investigations progress like expert work: hypothesis, evidence, refinement, and convergence on root cause.
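The hypothesis, evidence, refinement, and convergence cycle can be sketched as a small loop. This is a toy illustration under stated assumptions, not Cisco’s implementation: each hypothesis is modeled as a set of signals it requires, a hypothesis is dropped when a required signal is observed absent, and the next action is the unchecked signal that splits the surviving hypotheses most evenly. All names, signals, and the selection heuristic are hypothetical.

```python
from typing import Callable, Optional


def surviving(hypotheses: dict[str, set[str]], observed: dict[str, bool]) -> dict[str, set[str]]:
    """Keep hypotheses none of whose required signals has been observed absent."""
    return {
        name: required
        for name, required in hypotheses.items()
        if all(observed.get(signal, True) for signal in required)
    }


def next_best_action(candidates: dict[str, set[str]], observed: dict[str, bool]) -> Optional[str]:
    """Pick the unchecked signal that splits the candidates most evenly (ties: alphabetical)."""
    all_signals = {s for required in candidates.values() for s in required}
    unchecked = sorted(all_signals - set(observed))
    if not unchecked:
        return None
    half = len(candidates) / 2
    return min(
        unchecked,
        key=lambda s: abs(sum(s in required for required in candidates.values()) - half),
    )


def investigate(hypotheses: dict[str, set[str]], probe: Callable[[str], bool]):
    """Probe signals one at a time until one hypothesis remains or nothing is left to check."""
    observed: dict[str, bool] = {}
    candidates = dict(hypotheses)
    while len(candidates) > 1:
        signal = next_best_action(candidates, observed)
        if signal is None:
            break
        observed[signal] = probe(signal)                # gather evidence
        candidates = surviving(candidates, observed)    # refine the hypothesis set
    return sorted(candidates), observed


# Hypothetical hypotheses and ground truth for one interface problem.
hypotheses = {
    "mtu_mismatch": {"config_mtu_changed", "input_errors"},
    "bad_optic": {"input_errors", "low_rx_power"},
    "congestion": {"output_drops", "high_utilization"},
}
present = {"config_mtu_changed", "input_errors"}

root_cause, observed = investigate(hypotheses, probe=lambda signal: signal in present)
print(root_cause, observed)
```

In a real system the probe would be a telemetry query or a tool run, and the choice of the next action would come from the model’s reasoning instead of a fixed heuristic.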

Brought to life in Cisco products

The model is brought to life through Cisco AI products that operators use every day. In Cisco AI Canvas, it helps teams investigate across domains with a coherent evidence record, generate structured outputs from large telemetry, and move from suspicion to validated root cause faster. In Cisco AI Assistant experiences, it turns natural-language intent into operator-grade reasoning and actionable next steps, grounded in the telemetry and context available to the user.

What’s actually different

Many vendors claim AI for networking. The Cisco Deep Network Model differentiates on specific operational properties.

- Purpose-built training and expert vetting for networking accuracy

- Engineering for machine data scale through Lightweight Autonomous Program Synthesis and Execution

- Lossless context optimization for long investigations through Analytics Context Engineering

- A roadmap to adaptive troubleshooting through the Next Best Action (NBA) loop

For technical leaders, this is about correctness, auditability, and reliability at production scale. For business leaders, it’s about faster convergence on root cause, fewer dead ends, and a more credible foundation for agentic operations that can execute with discipline instead of guesswork.